Research

I apply Resource Allocation and

market

design methods to real settings, including Kidney

Exchange and Blood Donation. This

work combines theory (such as matching and complexity analysis), empirical methods (such as

data-driven simulations), and real-world experiments. To ensure that these systems align with

stakeholder interests, I also study AI and Human Decision

Making. My academic advisor is John P Dickerson.

I apply resource allocation and market design methods to real settings, including Kidney Exchange and Blood Donation.

Patients with kidney failure have only two options: a lifetime on dialysis, or kidney

transplantation. Dialysis is far more expensive and burdensome than transplantation,

however

donor kidneys are scarce — on average, 20 people die each day in

the US while waiting for a

transplant.

Furthermore, many patients in need of a kidney have willing living donors, but

cannot undergo

transplantation due to medical incompatibilities.

To address this supply-demand mismatch, kidney exchange allows patients with willing

living

donors to swap their donors in order to find a compatible (or better) patient-donor

match.

Formulated as an optimization problem, kidney exchange is NP-hard and APX-hard,

though modern

exchanges are solvable in a reasonable amount of time.

Show more ∨

Show Less ∧

Robustness to Uncertainty

There are many sources of uncertainty in real kidney exchanges—due to medical, moral,

and policy

factors. These sources of uncertainty are difficult to characterize, and can severely

impact the

outcome of an exchange. We investigate techniques from robust optimization to address

this problem.

Publications:

-

Duncan C McElfresh, John P Dickerson, Ke Ren, and Hoda Bidkhori.

"Distributionally

Robust Cycle and Chain Packing With Application To Organ Exchange." 2021 Winter

Simulation Conference (WSC).

-

Duncan C McElfresh, Michael Curry, Sarah E Booker, Morgan Stuart, Darren

Stewart,

Ruthanne Leishman, Tuomas

Sandholm, and John P Dickerson. "Who can be matched via kidney exchange?"

[abstract]. Am

J Transplant. 2021; 21 (suppl 3). Abstract presented as a poster at the 2021

American Tranpsplant Congress (ATC).

-

Duncan C McElfresh, Michael Curry, Sarah E Booker, Morgan Stuart, Darren

Stewart,

Ruthanne Leishman, Tuomas

Sandholm, and John P Dickerson. "Improving Policy-constrained Kidney Exchange Via

Pre-screening." Am J Transplant. 2021; 21 (suppl 3). Abstract presented as a

talk at

the 2021 American Tranpsplant Congress (ATC).

- McElfresh, Duncan C, Michael Curry,

Tuomas Sandholm,

and John P Dickerson, "Improving Policy-Constrained Kidney Exchange via

Pre-Screening.”

Advances in Neural Information Processing Systems 33: Annual Conference on

Neural

Information Processing Systems (NeurIPS), 2020.

-

Bidkhori, Hoda, John P Dickerson, Ke Ren, and Duncan C

McElfresh. “Kidney exchange with Inhomogeneous Edge Existence Uncertainty.”

Conference on Uncertainty in Artificial Intelligence (UAI). 2020.

-

McElfresh, Duncan C, Hoda Bidkhori, and John P Dickerson, "Scalable

Robust Kidney

Exchange.” Conference on Artificial Intelligence

(AAAI), 2019.

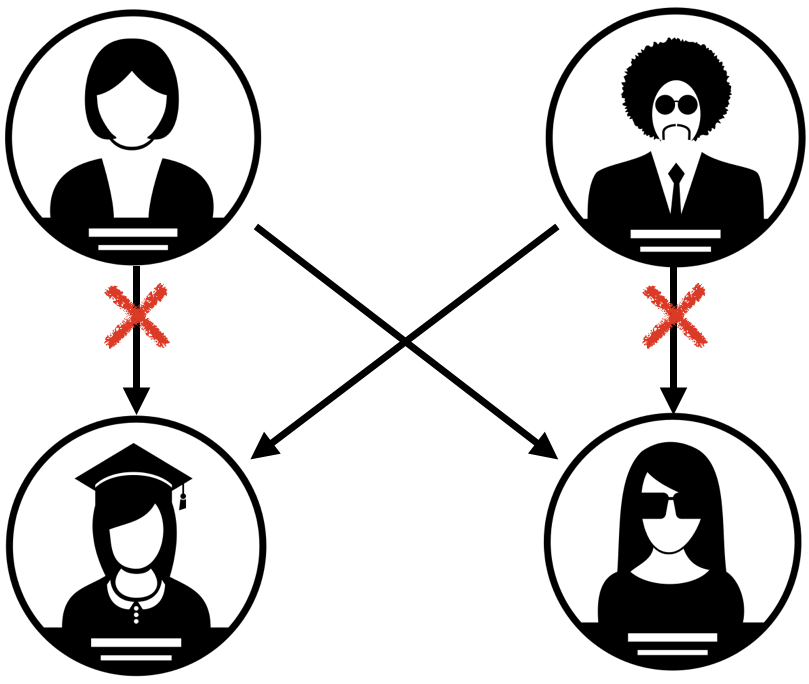

Fairness in Kidney Exchange

How can we prioritize marginalized patients, without severely impacting the overall

exchange? We

study several different methods for enforcing this notion of fairness, and

demonstrate their

effects on data collected from real exchanges.

Publications:

-

McElfresh, Duncan C, and John P Dickerson, "Balancing

lexicographic fairness and a utilitarian objective with application to kidney

exchange."

Conference on Artificial Intelligence (AAAI), 2018.

Ethics and Kidney Exchange

Designing a kidney exchange program requires input from medical professionals,

policymakers,

computer scientists, and ethicists. A "good" program should be both technically- and

morally-sound — however technical experts (e.g., computer scientists) and

stakeholders (e.g.,

medical professionals) often work independently. We propose a formal division of

labor between

technical experts and stakeholders, and outline a framework through which these

experts can

collaborate. Through this framework we analyze existing kidney exchange programs and

survey the

technical literature on kidney exchange algorithms. We identify areas for future

collaboration

between technical experts and stakeholders.

Relevant work:

-

Presentation: McElfresh, Duncan C, Patricia Mayer, Gabriel

Schnickel, and

John P Dickerson. "Ok Google: Who Gets the Kidney?": Artificial Intelligence

and

Transplant Algorithms. Panel presentation and discussion at the annual

meeting of

the American Society of Bioethics and Humanities (ASBH), Anaheim, CA. (slides)

Immunosuppression

Recent advances in immunosuppression treatments allow some patients to receive a kidney

transplant from donors who are otherwise medically incompatible. We develop a

theoretical

model for this setting, and provide an optimization framework for a wide variety of

objectives (e.g., prioritizing certain types of patients or transplants). Simulations

indicate that even a small number of immunosuppressants (10 or 20) can double

the

number of transplants facilitated by real-sized exchanges with hundreds of patients.

Publications:

-

Haris Aziz, Ágnes Cseh, John P Dickerson, and Duncan C McElfresh. “Optimal

Kidney

Exchange with Immunosuppressants.”

Conference on Artificial Intelligence (AAAI). 2021.

Show Less ∧

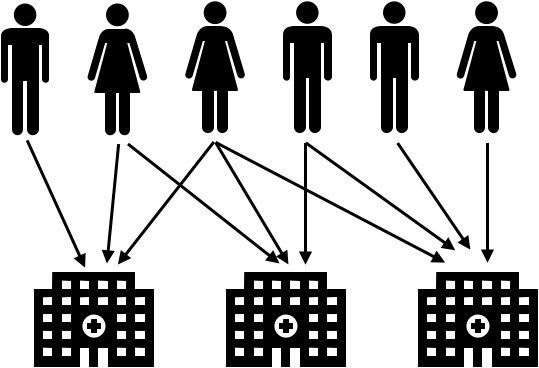

Blood is a scarce resource that can save the lives of those in need, and managing the

blood

supply chain has been a topic of research for decades. We consider an aspect of the

blood supply

chain that is seldom addressed by the literature: coordinating a network of donors

to meet

demand from a network of recipients.

Show more ∨

Show Less ∧

In a collaboration with Facebook, we deployed the first large-scale algorithmic system

for

matching blood donors with recipients. We focused on the Facebook

Blood Donation Tool, a platform that connects prospective blood donors with

nearby

recipients. There are many objectives in this setting, including: increasing the number

of

blood donations, treating recipients fairly, and respecting user preferences. To

formalize

these goals we developed an online matching framework, and matching policies for

automatic

donor notification. Both simulations and a fielded experiment demonstrate that our

methods

increase expected donation rates by 5% which—when

generalized to the entire Blood Donation Tool—corresponds to an increase in tens of

thousands of donations every few months.

Relevant work:

-

McElfresh, Duncan C, Christian Kroer, Sergey Pupyrev, Eric

Sodomka, Karthik Abinav Sankararaman, Zack Chauvin, Neil Dexter, John P

Dickerson. “Matching

Algorithms for Blood Donation” Under review at PNAS. An earlier version appeared at

The 21st ACM Conference on Economics and

Computation

(EC), 2020.

-

Poster and Presentation: McElfresh, Duncan C, Christian Kroer,

Sergey

Pupyrev, Eric Sodomka, John P Dickerson. “Matching Algorithms for Blood

Donation.”

Workshop on Mechanism Design for Social Good MD4SG, 2019.

Show Less ∧

AI and Human Decision Making

AI is increasingly used to influence, or make, important decisions in a wide range of domains,

including medicine, education, criminal justice, and financial services. Indeed, two key

examples

are kidney exchange and blood donation. I study the interaction between

stakeholders and algorithmic decision tools, with an eye toward developing responsible decision

support tools.

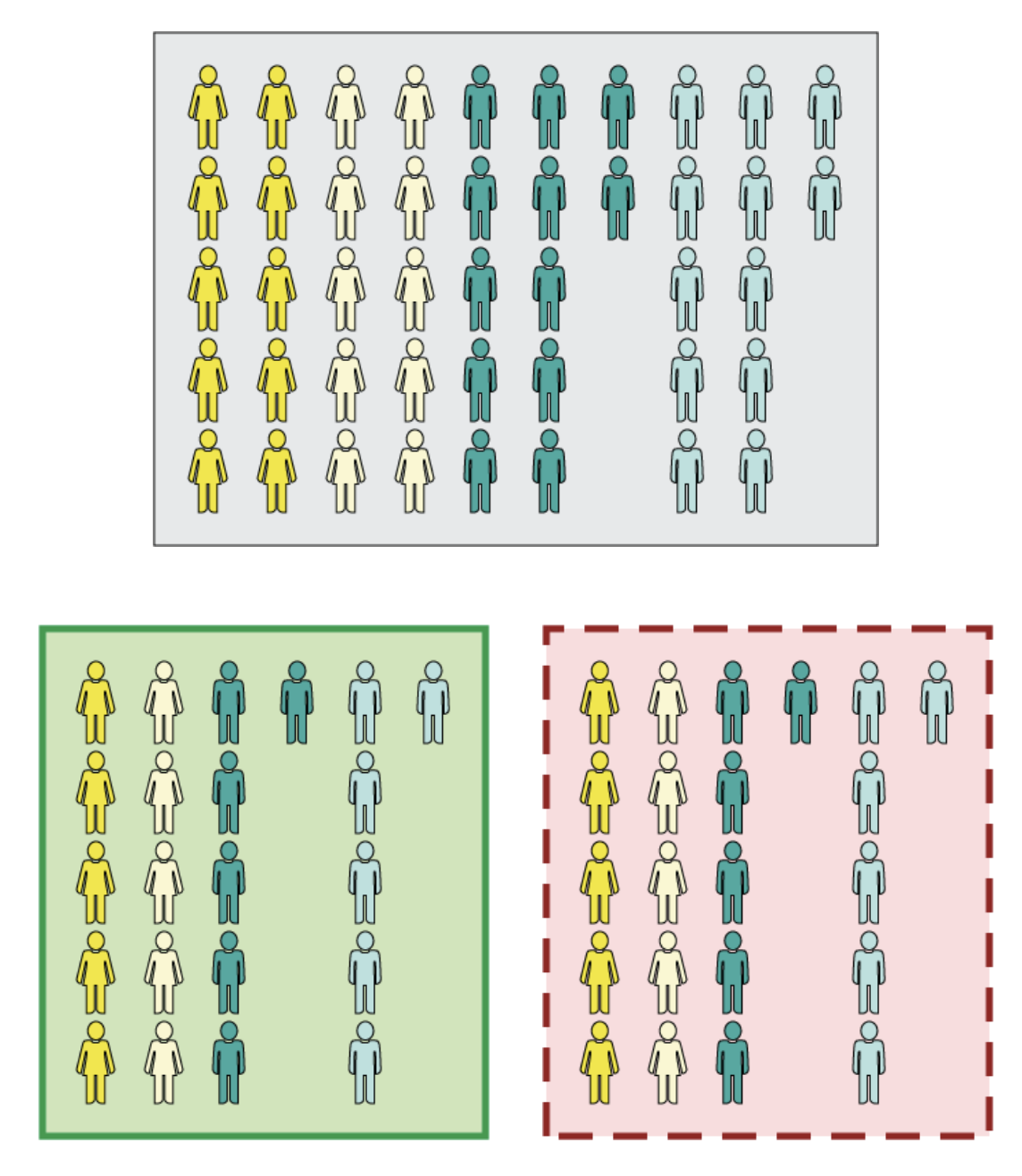

AI and ML researchers have proposed several mathematical definitions of fairness, however it is

not

clear if stakeholders understand or agree with these notions. We develop and validate a

comprehension score to measure peoples’ understanding of mathematical fairness. Using a

hypothetical decision scenario related to hiring, we translate several mathematical fairness

definitions into “rules” that a hiring manager must follow. Using our comprehension

score, we find that most people do not understand these rules, and those who do often disagree

with

them. This raises questions about the usefulness of algorithmic fairness: can an AI system be

truly “fair” if its stakeholders do not understand its behavior?

Publications ∨

Publications ∧

-

Saha, Debjani, Candice Schumann, Duncan C McElfresh, John P

Dickerson, Michelle L Mazurek and Michael Carl Tschantz. “Measuring Non-Expert

Comprehension

of Machine Learning Fairness Metrics.” Proceedings of the Thirty-seventh

International

Conference on Machine Learning (ICML). 2020.

-

Saha, Debjani, Candice Schumann, Duncan C McElfresh, John P Dickerson,

Michelle L

Mazurek and Michael Carl Tschantz. “Human Comprehension of Fairness in Machine

Learning." AAAI/ACM

Conference on Artificial Intelligence, Ethics, and Society (AIES),

2020.

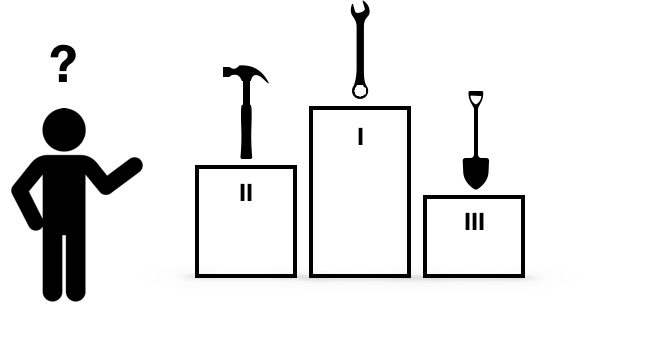

Many modern

AI methods, are guided by models of stake-

holder preferences, which are often learned

through observed decisions (such as product

purchases) or through hypothetical decisions

(e.g., surveys). There are many cases where

stakeholders may be unwilling to express a

preference: for example, if more information

is needed to arrive at a decision, or of all available options are bad; in these cases we say

they

are indecisive. Drawing from moral philosophy

and psychology, we develop a class of indecision models, which can be fit to observed data; in

two

survey studies we that indecision is common,

and several causes of indecision are plausible. This raises many questions for the use of AI in

decision making: from a theoretical perspective, how should we aggregate indecisive voters if

indecision has multiple meanings? From an empirical perspective, how can we identify an

indecisive

agent, and what characteristics of a decision scenario lead to indecision?

Publications ∨

Publications ∧

-

McElfresh, Duncan C, Lok Chan, Kenzie Doyle, Walter Sinnott-Armstrong,

Vincent

Conitzer, Jana Schaich Borg,John P Dickerson. “Indecision Modeling.”

Conference on Artificial Intelligence (AAAI). 2021. (poster)

Using a survey study we simulate the effect of an AI tool on decision making: we suggest random

predictions of participant preferences, and we attribute this prediction to an "AI system," or a

human "expert." We find that participants follow these prediction—even though they are random—

both

when the predictions are attributed to “AI”, and to "experts" (compared with a control group

that

receives no prediction). This has serious implications for AI in decision making: if random

decision

support can influence a stakeholder’s behavior, it is easy to imagine that adversarial AI

systems

can easily manipulate a stakeholder’s decisions. Furthermore, if AI tools are both trained on

and

influence stakeholders’ behavior, it is difficult to define what a "correct" decision is.

Publications ∨

Publications ∧

-

Chan, Lok, Kenzie Doyle, Duncan C McElfresh, Vincent Conitzer, John P

Dickerson,

Jana

Schaich Borg and Walter Sinnott-Armstrong. “Artificial Artificial Intelligence:

Measuring

Influence of AI "Assessments" on Moral Decision-Making.” AAAI/ACM Conference on

Artificial Intelligence, Ethics, and Society (AIES),

2020.